2025 Wrapped

Building Panjabi for the age of intelligence.

A Year in Review

A Year of Foundation

Building the lexical, semantic, and computational foundations of the Panjabi language for artificial intelligence and future systems.

Lexical Coverage

Systematic identification, curation, and validation of Panjabi vocabulary, including headwords, inflected forms, attested usage, and high-frequency multi-word expressions derived from corpus-level n-gram analysis, with an emphasis on consistency, completeness, and long-term extensibility.
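As a rough illustration of how corpus-level n-gram analysis can surface candidate multi-word expressions, the sketch below counts n-grams over pre-tokenized sentences and keeps those above a frequency threshold. The function names, the threshold, and the assumption that input arrives already tokenized are all hypothetical; this is not a description of the actual curation pipeline.

```python
from collections import Counter


def count_ngrams(sentences, n=2):
    """Count n-grams over an iterable of token lists (hypothetical helper)."""
    counts = Counter()
    for tokens in sentences:
        # Slide a window of length n across each sentence.
        for i in range(len(tokens) - n + 1):
            counts[tuple(tokens[i:i + n])] += 1
    return counts


def frequent_ngrams(sentences, n=2, min_count=2):
    """Return (ngram, count) pairs seen at least min_count times, most frequent first."""
    counts = count_ngrams(sentences, n)
    return [(gram, c) for gram, c in counts.most_common() if c >= min_count]


if __name__ == "__main__":
    # Placeholder tokens stand in for real Panjabi text.
    corpus = [["a", "b", "c"], ["a", "b", "d"]]
    print(frequent_ngrams(corpus, n=2, min_count=2))
```

Raw frequency is only a first filter; candidates found this way would still need the manual validation described above.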

Semantic Depth

Development of structured meaning representations, including sense differentiation, contextual usage, and semantic relationships, enabling accurate interpretation beyond surface-level word equivalence.

Script Coverage

Aligned representation of Panjabi across Gurmukhi and Shahmukhi scripts, addressing orthographic variation, normalization, and cross-script interoperability while preserving linguistic integrity.
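One small, concrete piece of normalization can be shown with Python's standard `unicodedata` module: some Gurmukhi letters can be encoded either as a single code point or as a base letter plus nukta, and Unicode normalization maps both spellings to one form. The sketch below covers only that step within Gurmukhi; it assumes NFC as the target form and does not attempt Gurmukhi–Shahmukhi alignment. The function name `normalize_gurmukhi` is hypothetical.

```python
import unicodedata


def normalize_gurmukhi(text: str) -> str:
    """Map canonically equivalent spellings to a single form (NFC assumed)."""
    return unicodedata.normalize("NFC", text)


if __name__ == "__main__":
    precomposed = "\u0A36"        # GURMUKHI LETTER SHA as one code point
    decomposed = "\u0A38\u0A3C"   # GURMUKHI LETTER SA followed by SIGN NUKTA
    # The raw strings differ, but their normalized forms compare equal.
    print(precomposed == decomposed)
    print(normalize_gurmukhi(precomposed) == normalize_gurmukhi(decomposed))
```

Without this step, two visually identical headwords could be treated as different entries during lookup or deduplication.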

Pronunciation & Phonetics

Collection and structuring of pronunciation and phonetic data to accurately model spoken Panjabi, supporting learners, educators, and speech-capable language systems across regional and dialectal variation.

Computational Readiness

Preparation of linguistic resources for computational use through structured datasets, annotation standards, and internal pipelines suitable for NLP systems and multimodal language models, without compromising linguistic rigor.

Significant Milestones

Corpus Expansion

- Built upon foundational Panjabi lexicographic work that was necessarily based on corpora available up to the 1990s
- Expanded and refined curated Panjabi text corpora to reflect linguistic developments of the subsequent decades
- Incorporated a broader range of genres, registers, and sources to capture both historical continuity and contemporary usage
- Prepared a scalable and updatable corpus foundation suitable for modern lexicographic, computational, and AI-era language analysis

Abugida Encoding Framework

- Resolved long-standing incompatibilities arising from proprietary ASCII-based Panjabi font encodings
- Designed and implemented a novel abugida-aware encoding and tokenization framework
- Eliminated the need for manual, font-specific character mapping tables
- Achieved robust handling of complex Gurmukhi features, including supplementary letters, nasalisation, visarg, subscripts, halant, and vowel diacritics
- Validated the framework across multiple abugida-based scripts, extending applicability beyond Panjabi
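To give a sense of what "abugida-aware" tokenization can mean in practice, the sketch below groups Unicode code points into orthographic clusters, keeping each base letter together with its vowel signs, nasalisation marks, and halant-joined subscript letters. It is a simplified illustration built on the standard `unicodedata` module, not the framework described above; the function name `split_clusters` and the clustering rules are assumptions.

```python
import unicodedata

HALANT = "\u0A4D"  # GURMUKHI SIGN VIRAMA


def split_clusters(text: str) -> list[str]:
    """Group code points into base-letter-plus-marks clusters (simplified rules)."""
    clusters: list[str] = []
    for ch in text:
        category = unicodedata.category(ch)
        # Combining marks (vowel signs, tippi, bindi, nukta, halant) attach backwards.
        is_mark = category.startswith("M")
        # A letter directly after a halant is treated as a subscript form.
        is_subjoined = (
            category.startswith("L")
            and bool(clusters)
            and clusters[-1].endswith(HALANT)
        )
        if clusters and (is_mark or is_subjoined):
            clusters[-1] += ch
        else:
            clusters.append(ch)
    return clusters


if __name__ == "__main__":
    word = "\u0A2A\u0A70\u0A1C\u0A3E\u0A2C\u0A40"  # ਪੰਜਾਬੀ
    print(split_clusters(word))  # three clusters from six code points
```

Splitting on clusters rather than individual code points keeps a tokenizer from separating a diacritic from the letter it modifies, which is one reason naive character-level processing tends to break on abugida scripts.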

RUP Pipeline

- Designed and implemented a modular, three-layer document generation architecture comprising a content stream, rule-based layout layer, and pagination engine
- Enabled large-scale generation of synthetic documents with precise word-, line-, and paragraph-level bounding box annotations
- Unified pagination-aware templating with a layout engine that dynamically governs text flow, spacing, and page boundaries
- Established a reusable, model-agnostic foundation for OCR training, document understanding, and layout-aware vision–language systems
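The idea of a content stream feeding a layout layer and a pagination step can be sketched in a few lines. The toy example below wraps words into lines, breaks lines into pages, and records a word-level bounding box for each word. Every name and parameter here (`WordBox`, `layout_words`, the fixed per-character width) is a hypothetical simplification; a real engine would measure shaped glyphs from a font rather than count characters, and this is not the RUP implementation.

```python
from dataclasses import dataclass


@dataclass
class WordBox:
    text: str
    page: int
    line: int      # line index within the page
    x: float
    y: float
    width: float
    height: float


def layout_words(words, page_width=200.0, page_height=60.0,
                 char_width=10.0, line_height=20.0, space=10.0):
    """Place words left to right, wrapping lines and pages, and return their boxes."""
    boxes = []
    page, line, x, y = 0, 0, 0.0, 0.0
    for word in words:
        # Assumption: fixed-width characters stand in for real glyph metrics.
        width = len(word) * char_width
        # Layout rule: wrap when the word would overflow the current line.
        if x > 0 and x + width > page_width:
            line += 1
            x = 0.0
            y += line_height
        # Pagination rule: start a new page when the line no longer fits.
        if y + line_height > page_height:
            page += 1
            line = 0
            y = 0.0
        boxes.append(WordBox(word, page, line, x, y, width, line_height))
        x += width + space
    return boxes


if __name__ == "__main__":
    for box in layout_words(["aa", "bbb", "cccc", "dd"],
                            page_width=60.0, page_height=40.0):
        print(box)
```

Because the annotations fall out of the same pass that places the text, the boxes agree with the layout by construction, which is the main appeal of synthetic documents for OCR training.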

Computational Pipelines

- Established a complete, end-to-end vision–language model fine-tuning pipeline with checkpointing, adapter-based training, and deployment integration
- Achieved repeatable and fault-tolerant training workflows through containerized environments and production-safe isolation
- Optimized training performance via parallel data processing and attention-level improvements, significantly reducing end-to-end training time
- Resolved critical convergence and stability issues, enabling reliable fine-tuning and improved loss characteristics

Evaluation & Benchmarking

- Defined task-specific evaluation protocols for Panjabi OCR and document understanding workflows
- Constructed curated validation and test sets aligned with real-world document distributions
- Enabled consistent, repeatable comparison across model iterations and dataset revisions
- Established a foundation for tracking progress, regressions, and research trade-offs over time
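A common metric in OCR evaluation is character error rate (CER): the edit distance between the reference and the recognized text, divided by the reference length. The sketch below is a minimal, dependency-free version offered as an example of such a metric; whether CER is among the protocols defined above is an assumption, and the function names are hypothetical.

```python
def edit_distance(ref: str, hyp: str) -> int:
    """Levenshtein distance between two strings, computed row by row."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def character_error_rate(ref: str, hyp: str) -> float:
    """Edit distance normalized by the reference length."""
    if not ref:
        raise ValueError("reference text must be non-empty")
    return edit_distance(ref, hyp) / len(ref)


if __name__ == "__main__":
    print(character_error_rate("abcd", "abxd"))
```

This version compares individual code points. For Gurmukhi it may be preferable to normalize both strings first, or to compare orthographic clusters instead, so that a single wrong diacritic is not weighted the same as a wrong letter.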

The work completed this year establishes a durable foundation for the next phase of Panjabi language research and infrastructure.

So much more is coming in 2026

[Q1/2026]

Release a research-grade Panjabi–English lexical resource with unified script normalization and machine-readable semantic structure.

[Q2/2026]

Extend the lexical infrastructure toward multilingual alignment, phonetic representation, and controlled programmatic access for research use.

[Q3/2026]

Introduce the first Panjabi AI language products, powered by Akhar’s research pipelines.

[Q4/2026]

Stabilize shared research and developer infrastructure for Panjabi AI.

Looking Ahead:

Language Priorities for 2026

[1]

Conscious Impact

As Panjabi becomes increasingly represented in digital and AI systems, language development must be guided by deliberate responsibility, ensuring accuracy, inclusivity, and long-term cultural and scholarly integrity.

[2]

Linguistic Resilience

The structural integrity of Panjabi across scripts, encodings, and computational representations is essential to prevent fragmentation and preserve the language’s coherence as technologies evolve.

[3]

First-Class Digital Citizenship

Panjabi must be supported as a first-class language within digital and AI ecosystems, backed by native-grade lexical, semantic, and computational infrastructure rather than approximations or retrofits.

[4]

Research-Led Language Stewardship

Sustainable progress depends on research-driven stewardship, where shared platforms, reproducible methods, and long-term infrastructure take precedence over isolated tools or short-term applications.

Last But Not Least: The Moments

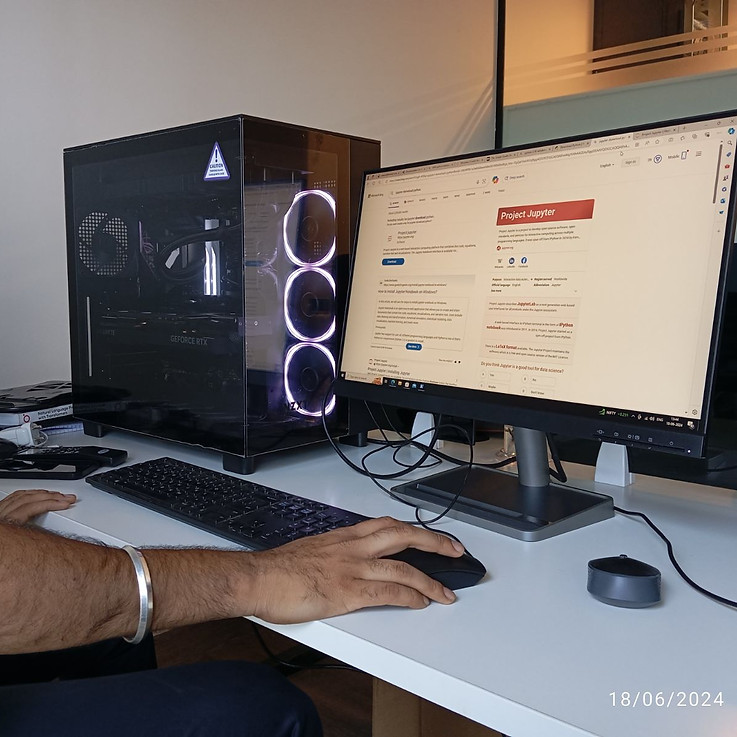

Our first NVIDIA RTX machine

Volunteers building the first annotated datasets

Where it all started in 2024

Real-world language data collection

Assembling the foundations of the digital vault